Build a LLM (2/2)

Have you ever been curious about what makes large language models (LLMs) tick? Instead of just using them, why not try building one yourself?

This post is based mainly on my own experience while following the steps in the book, "Build a Large Language Model (from scratch)" by Sebastian Raschka.

Pretrain on unlabeled data

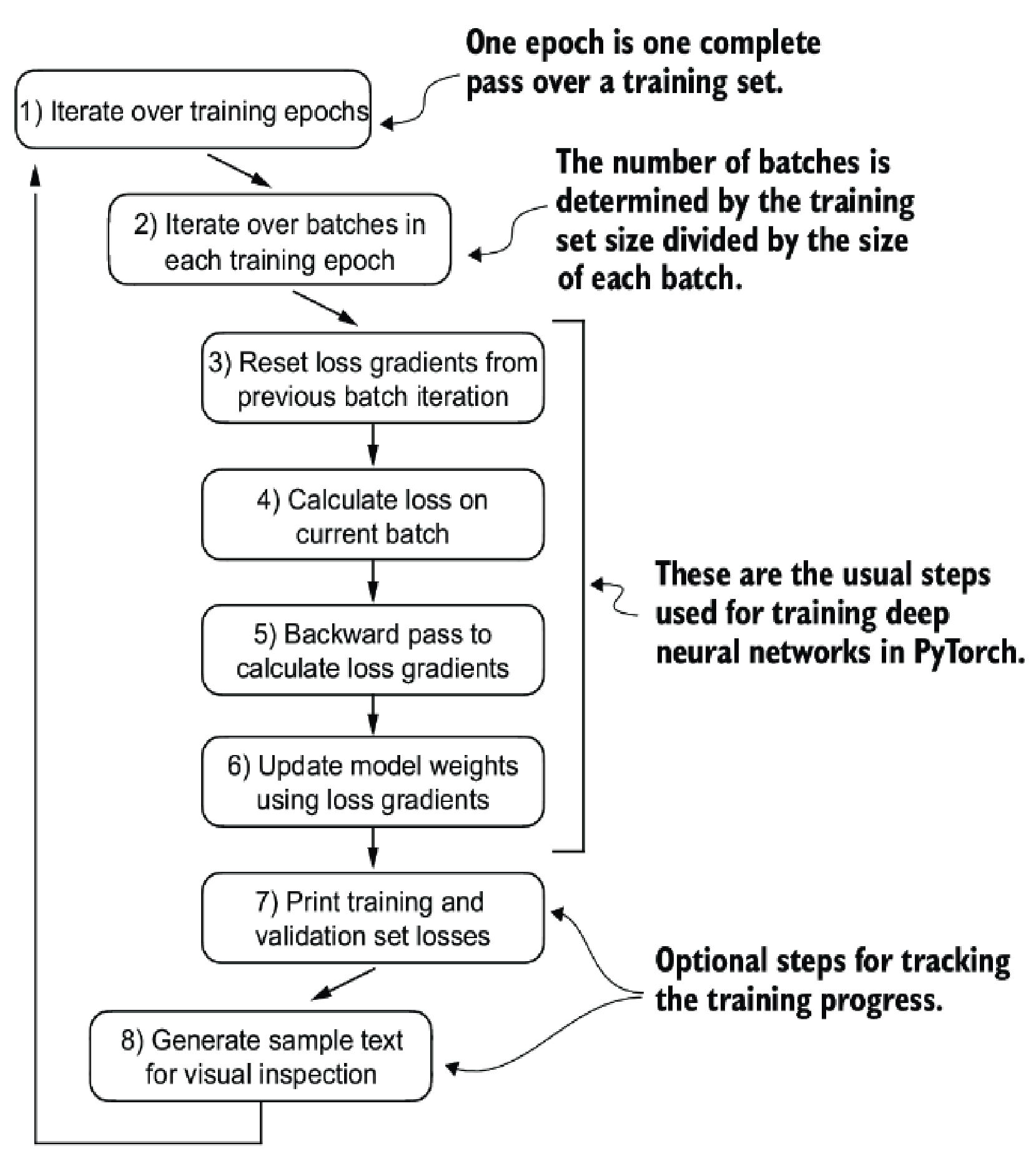

In short, we'll use all the steps we have done so far to make the GPT model to generate text based on the input, then calculate the training and loss by comparing the output with the training data. Then using backpropagation to adjust weight parameters to make the model be better on prediction human-like text.

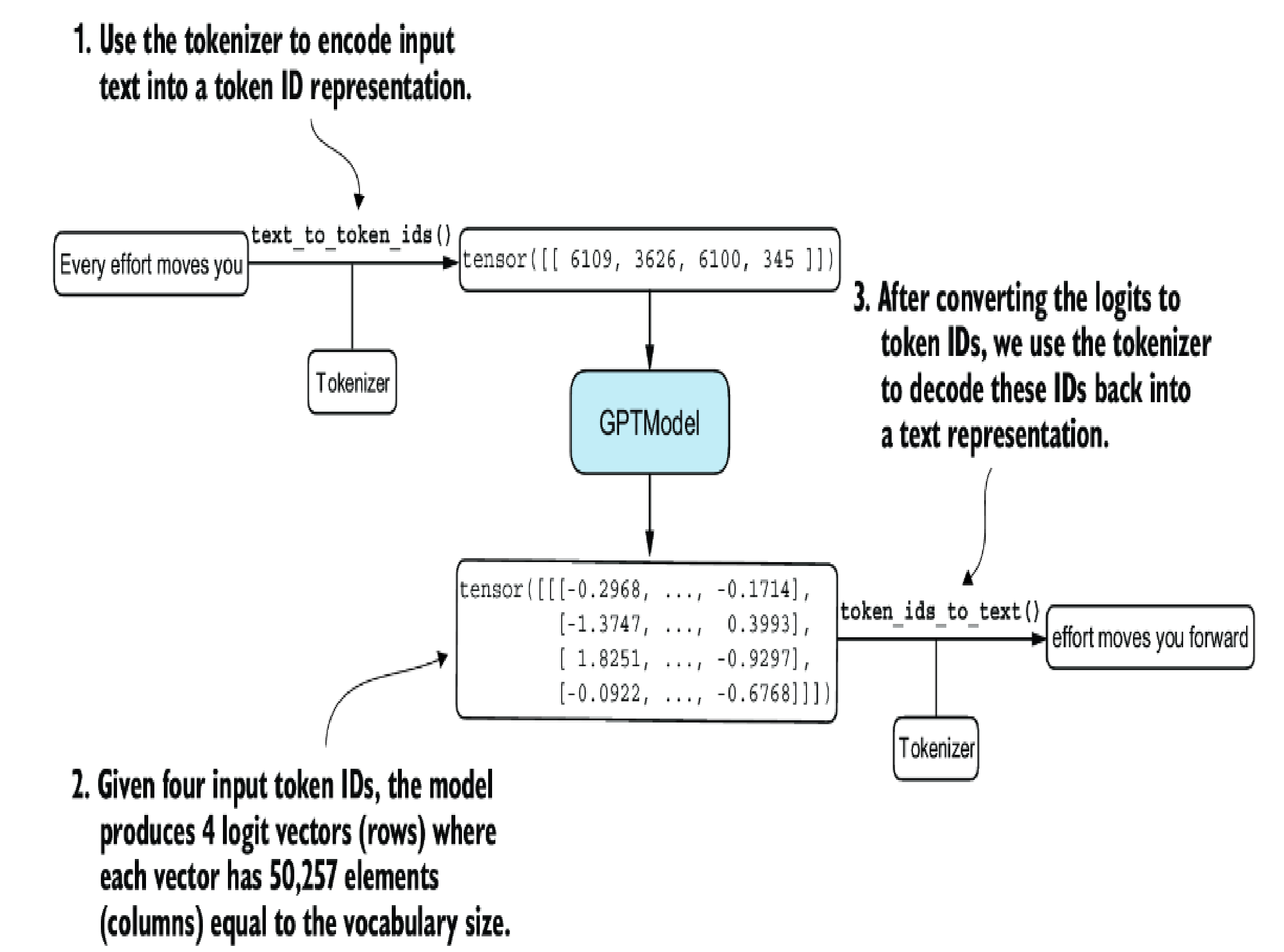

Generate text

Runnable code

import tiktoken

import torch

from config import GPT_CONFIG_124M

from gpt import GPTModel, generate_text_simple

def text_to_token_ids(text, tokenizer):

encoded = tokenizer.encode(text, allowed_special={'<|endoftext|>'})

encoded_tensor = torch.tensor(encoded).unsqueeze(0) #1

return encoded_tensor

def token_ids_to_text(token_ids, tokenizer):

flat = token_ids.squeeze(0) #2

return tokenizer.decode(flat.tolist())

if __name__ == "__main__":

torch.manual_seed(123)

model = GPTModel(GPT_CONFIG_124M)

start_context = "Every effort moves you"

tokenizer = tiktoken.get_encoding("gpt2")

token_ids = generate_text_simple(

model=model,

idx=text_to_token_ids(start_context, tokenizer),

max_new_tokens=10,

context_size=GPT_CONFIG_124M["context_length"]

)

print("Output text:\n", token_ids_to_text(token_ids, tokenizer))generate.py

Evaluate the generated text

When model makes the prediction, it will returns its confidence for all tokens in the vocab library. In order words the command logits = model(inputs) will produce a tensor with shape of (batch_size, num_tokens, vocab_size) into logits. In which, batch_size is the number of sequence of inputs, num_tokens is the number of tokens in each input, and vocab_size is the vocabulary dictionary size.

Different from human behavior, when we tend compare the output with the expectation, in training LLM, the process just doesn't care its output (the final selected tokens), but tell the model which are our expected token probabilities from the digits it has just generated. Then the model will be able learn (or adjust) through the backpropagation process and this process requires a loss function.

Cross Entropy loss

At its core, the cross entropy loss is a popular measure in machine learning and deep learning that measures the difference between two probability distributions—typically, the true distribution of labels (here, tokens in a dataset) and the predicted distribution from a model (for instance, the token probabilities generated by an LLM).

# For the cross_entropy loss function in PyTorch, we want to

# flatten these tensors by combining them over the batch

# dimension

logits_flat = logits.flatten(0, 1)

targets_flat = targets.flatten()

loss = torch.nn.functional.cross_entropy(logits_flat, targets_flat)just a piece of code for understanding

tldr; there is a common misconception of cross entropy loss comparison. It does NOT compare the target token probabilities against the actual produced token probabilities. BUT it calculates the loss based on the target token probabilities with the FULL probability distribution over all tokens in vocab.

Perplexity

Perplexity measures how well the probability distribution predicted by the model matches the actual distribution of the words in the dataset. Similar to the loss, a lower perplexity indicates that the model predictions are closer to the actual distribution.

Backpropagation

This is indeed supported by the pytorch library, we just need 2 steps: loss.backward() to compute the gradient of loss backward through layers for each parameter, but it does not apply the changes. optimizer.step() is the actual application step from those gradient of loss computed just in previous command.

However, in order to apply the changes to the parameter, we also want to have way to optimize it so instead of just apply the gradient, we tend to have param = param - learning_rate * gradient

AdamW

Adam optimizers are a popular choice for training deep neural networks. AdamW is a variant of Adam that improves the weight decay approach, which aims to minimize model complexity and prevent overfitting by penalizing larger weights. This adjustment allows AdamW to achieve more effective regularization and better generalization; thus, AdamW is frequently used in the training of LLMs.

Training loop

import tiktoken

import torch

import os

import time

import urllib.request

import matplotlib.pyplot as plt

from matplotlib.ticker import MaxNLocator

from dataset import create_dataloader_v1

from generate import text_to_token_ids, token_ids_to_text

from gpt import GPTModel, generate_text_simple

def calc_loss_batch(input_batch, target_batch, model, device):

input_batch, target_batch = input_batch.to(device), target_batch.to(device)

logits = model(input_batch)

loss = torch.nn.functional.cross_entropy(logits.flatten(0, 1), target_batch.flatten())

return loss

def calc_loss_loader(data_loader, model, device, num_batches=None):

total_loss = 0.

if len(data_loader) == 0:

return float("nan")

elif num_batches is None:

num_batches = len(data_loader)

else:

# Reduce the number of batches to match the total number of batches in the data loader

# if num_batches exceeds the number of batches in the data loader

num_batches = min(num_batches, len(data_loader))

for i, (input_batch, target_batch) in enumerate(data_loader):

if i < num_batches:

loss = calc_loss_batch(input_batch, target_batch, model, device)

total_loss += loss.item()

else:

break

return total_loss / num_batches

def train_model_simple(model, train_loader, val_loader, optimizer, device, num_epochs,

eval_freq, eval_iter, start_context, tokenizer):

# Initialize lists to track losses and tokens seen

train_losses, val_losses, track_tokens_seen = [], [], []

tokens_seen, global_step = 0, -1

# Main training loop

for epoch in range(num_epochs):

model.train() # Set model to training mode

for input_batch, target_batch in train_loader:

optimizer.zero_grad() # Reset loss gradients from previous batch iteration

loss = calc_loss_batch(input_batch, target_batch, model, device)

loss.backward() # Calculate loss gradients

optimizer.step() # Update model weights using loss gradients

tokens_seen += input_batch.numel()

global_step += 1

# Optional evaluation step

if global_step % eval_freq == 0:

train_loss, val_loss = evaluate_model(

model, train_loader, val_loader, device, eval_iter)

train_losses.append(train_loss)

val_losses.append(val_loss)

track_tokens_seen.append(tokens_seen)

print(f"Ep {epoch+1} (Step {global_step:06d}): "

f"Train loss {train_loss:.3f}, Val loss {val_loss:.3f}")

# Print a sample text after each epoch

generate_and_print_sample(

model, tokenizer, device, start_context

)

return train_losses, val_losses, track_tokens_seen

def evaluate_model(model, train_loader, val_loader, device, eval_iter):

model.eval()

with torch.no_grad():

train_loss = calc_loss_loader(train_loader, model, device, num_batches=eval_iter)

val_loss = calc_loss_loader(val_loader, model, device, num_batches=eval_iter)

model.train()

return train_loss, val_loss

def generate_and_print_sample(model, tokenizer, device, start_context):

model.eval()

context_size = model.pos_emb.weight.shape[0]

encoded = text_to_token_ids(start_context, tokenizer).to(device)

with torch.no_grad():

token_ids = generate_text_simple(

model=model, idx=encoded,

max_new_tokens=50, context_size=context_size

)

decoded_text = token_ids_to_text(token_ids, tokenizer)

print(decoded_text.replace("\n", " ")) # Compact print format

model.train()

def plot_losses(epochs_seen, tokens_seen, train_losses, val_losses):

fig, ax1 = plt.subplots(figsize=(5, 3))

# Plot training and validation loss against epochs

ax1.plot(epochs_seen, train_losses, label="Training loss")

ax1.plot(epochs_seen, val_losses, linestyle="-.", label="Validation loss")

ax1.set_xlabel("Epochs")

ax1.set_ylabel("Loss")

ax1.legend(loc="upper right")

ax1.xaxis.set_major_locator(MaxNLocator(integer=True)) # only show integer labels on x-axis

# Create a second x-axis for tokens seen

ax2 = ax1.twiny() # Create a second x-axis that shares the same y-axis

ax2.plot(tokens_seen, train_losses, alpha=0) # Invisible plot for aligning ticks

ax2.set_xlabel("Tokens seen")

fig.tight_layout() # Adjust layout to make room

plt.savefig("loss-plot.png")

plt.show()

# Note:

# Uncomment the following code to calculate the execution time

start_time = time.time()

file_path = "the-verdict.txt"

url = "https://raw.githubusercontent.com/rasbt/LLMs-from-scratch/main/ch02/01_main-chapter-code/the-verdict.txt"

if not os.path.exists(file_path):

with urllib.request.urlopen(url) as response:

text_data = response.read().decode('utf-8')

with open(file_path, "w", encoding="utf-8") as file:

file.write(text_data)

else:

with open(file_path, "r", encoding="utf-8") as file:

text_data = file.read()

# Train/validation ratio

train_ratio = 0.90

split_idx = int(train_ratio * len(text_data))

train_data = text_data[:split_idx]

val_data = text_data[split_idx:]

GPT_CONFIG_124M = {

"vocab_size": 50257, # Vocabulary size

"context_length": 256, # Context length

"emb_dim": 768, # Embedding dimension

"n_heads": 12, # Number of attention heads

"n_layers": 12, # Number of layers

"drop_rate": 0.1, # Dropout rate

"qkv_bias": False # Query-Key-Value bias

}

torch.manual_seed(123)

model = GPTModel(GPT_CONFIG_124M)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

optimizer = torch.optim.AdamW(model.parameters(), lr=0.0004, weight_decay=0.1)

train_loader = create_dataloader_v1(

train_data,

batch_size=2,

max_length=GPT_CONFIG_124M["context_length"],

stride=GPT_CONFIG_124M["context_length"],

drop_last=True,

shuffle=True,

num_workers=0

)

val_loader = create_dataloader_v1(

val_data,

batch_size=2,

max_length=GPT_CONFIG_124M["context_length"],

stride=GPT_CONFIG_124M["context_length"],

drop_last=False,

shuffle=False,

num_workers=0

)

# Initialize the tokenizer

tokenizer = tiktoken.get_encoding("gpt2")

num_epochs = 10

train_losses, val_losses, tokens_seen = train_model_simple(

model, train_loader, val_loader, optimizer, device,

num_epochs=num_epochs, eval_freq=5, eval_iter=5,

start_context="Every effort moves you", tokenizer=tokenizer

)

epochs_tensor = torch.linspace(0, num_epochs, len(train_losses))

plot_losses(epochs_tensor, tokens_seen, train_losses, val_losses)

# Note:

# Uncomment the following code to show the execution time

end_time = time.time()

execution_time_minutes = (end_time - start_time) / 60

print(f"Training completed in {execution_time_minutes:.2f} minutes.")simple_training.py

Save trained weights

In the context of LLMs and other deep learning models, weights refer to the trainable parameters that the learning process adjusts. These weights are also known as weight parameters or simply parameters. And saving a model simply just saving it's parameters of all layers into a format. With PyTorch, it is relative straightforward:

torch.save(model.state_dict(), "model.pth")save model parameters

Load model checkpoint

model = GPTModel(GPT_CONFIG_124M)

model.load_state_dict(torch.load("model.pth", map_location=device))

model.eval()If we use AdamW for optimizing and want to continue the training process, then we must save the state of AdamW, because it use historical data to adjust learning rates for each model parameter dynamically.

torch.save({

"model_state_dict": model.state_dict(),

"optimizer_state_dict": optimizer.state_dict(),

},

"model_and_optimizer.pth"

)save checkpoint for further processing

checkpoint = torch.load("model_and_optimizer.pth", map_location=device)

model = GPTModel(GPT_CONFIG_124M)

model.load_state_dict(checkpoint["model_state_dict"])

optimizer = torch.optim.AdamW(model.parameters(), lr=5e-4, weight_decay=0.1)

optimizer.load_state_dict(checkpoint["optimizer_state_dict"])

model.train();load checkpoint with optimizer parameters

Decoding strategies to control

randomness

Temperature scaling

Temperature scaling is just a fancy description for dividing the logits by a number greater than 0. So before computing the token probabilities by softmax function after the model has done the prediction.

def softmax_with_temperature(logits, temperature):

scaled_logits = logits / temperature

return torch.softmax(scaled_logits, dim=0)temperature scaling before softmax

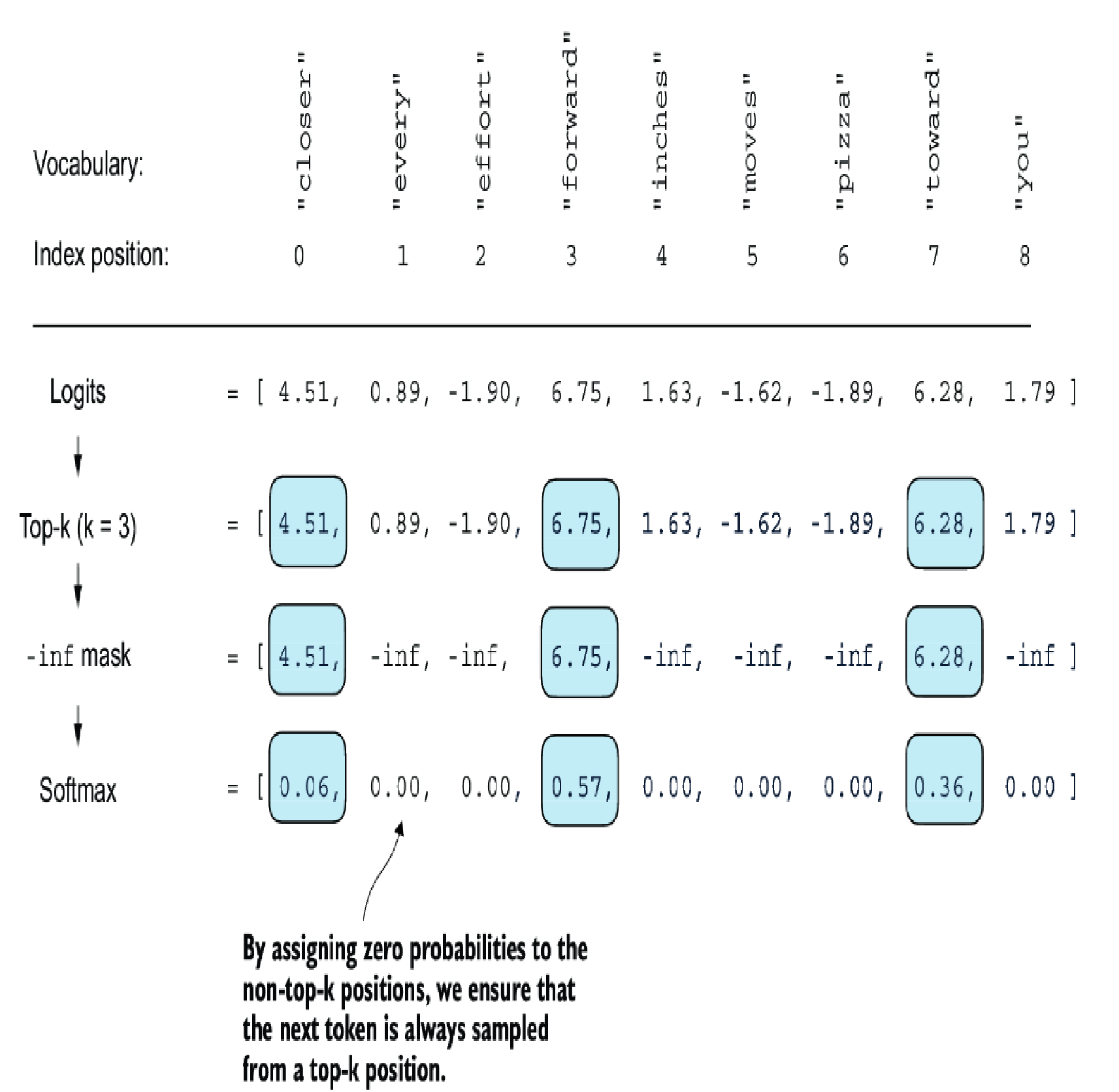

Top-k sampling

Top-k sampling, when combined with probabilistic sampling and temperature scaling, can improve the text generation results. In top-k sampling, we can restrict the sampled tokens to the top-k most likely tokens and exclude all other tokens from the selection process by masking their probability scores.

Runnable code

import tiktoken

import torch

from generate import text_to_token_ids, token_ids_to_text

from gpt import GPTModel

def generate(model, idx, max_new_tokens, context_size, temperature=0.0, top_k=None, eos_id=None):

# For-loop is the same as before: Get logits, and only focus on last time step

for _ in range(max_new_tokens):

idx_cond = idx[:, -context_size:]

with torch.no_grad():

logits = model(idx_cond)

logits = logits[:, -1, :]

# New: Filter logits with top_k sampling

if top_k is not None:

# Keep only top_k values

top_logits, _ = torch.topk(logits, top_k)

min_val = top_logits[:, -1]

logits = torch.where(logits < min_val, torch.tensor(float("-inf")).to(logits.device), logits)

# New: Apply temperature scaling

if temperature > 0.0:

logits = logits / temperature

# Apply softmax to get probabilities

probs = torch.softmax(logits, dim=-1) # (batch_size, context_len)

# Sample from the distribution

idx_next = torch.multinomial(probs, num_samples=1) # (batch_size, 1)

# Otherwise same as before: get idx of the vocab entry with the highest logits value

else:

idx_next = torch.argmax(logits, dim=-1, keepdim=True) # (batch_size, 1)

if idx_next == eos_id: # Stop generating early if end-of-sequence token is encountered and eos_id is specified

break

# Same as before: append sampled index to the running sequence

idx = torch.cat((idx, idx_next), dim=1) # (batch_size, num_tokens+1)

return idx

if __name__ == "__main__":

torch.manual_seed(123)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

checkpoint = torch.load("model_and_optimizer.pth", map_location=device)

# This config must be exactly same as the trained one.

GPT_CONFIG_124M = {

"vocab_size": 50257, # Vocabulary size

"context_length": 256, # Context length

"emb_dim": 768, # Embedding dimension

"n_heads": 12, # Number of attention heads

"n_layers": 12, # Number of layers

"drop_rate": 0.1, # Dropout rate

"qkv_bias": False # Query-Key-Value bias

}

model = GPTModel(GPT_CONFIG_124M)

model.load_state_dict(checkpoint["model_state_dict"])

# Using model.eval()

# switches the model to evaluation mode for inference,

# disabling the dropout layers of the model

model.eval()

tokenizer = tiktoken.get_encoding("gpt2")

token_ids = generate(

model=model,

idx=text_to_token_ids("Every effort moves you", tokenizer),

max_new_tokens=15,

context_size=GPT_CONFIG_124M["context_length"],

top_k=25,

temperature=1.4

)

print("Output text:\n", token_ids_to_text(token_ids, tokenizer))generate text with temperature and top-k sampling

Comments ()